New User Experience

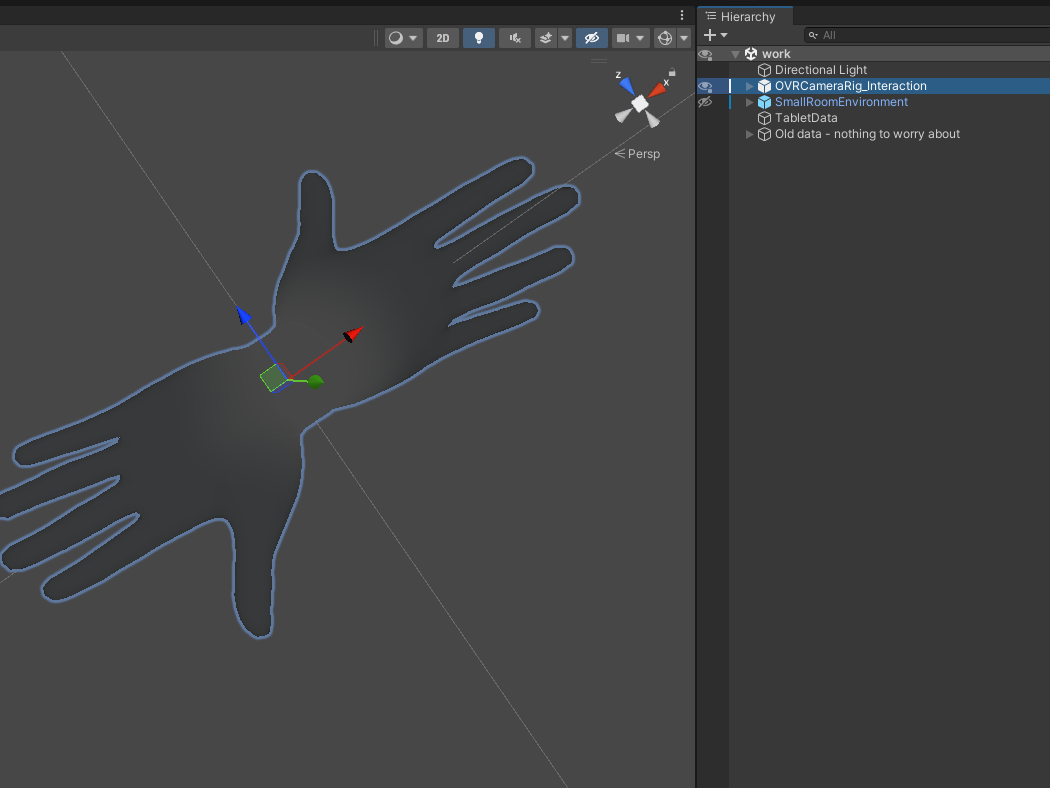

When hand tracking launched on Quest, the interface was novel. Users who turned on the feature for the first time needed thorough instruction on how to interact with the system using their hands. This new user experience was first built and refined in a grey-box Unity prototype that was used in extensive user testing studies. A second scene within the prototype was used to record the desired avatar motions and camera angles, which served as specific reference for the digital artist creating the final animations.

Quest System Gesture for Hands

Before hand tracking could launch on Quest, users needed to be able to summon and dismiss the system UI as easily as they could with controllers. There was also no existing framework for gesture recognition, so I collaborated with the engineering team to help create one. I built prototypes that thoroughly explored this interaction and helped test, refine, and validate it, both in preparation for launch and long after.

Quick Actions Menu for Hands

Early feedback on hand tracking revealed pain points around frequently used system actions that users could not reach without navigating into settings. My team designed variations of a deployable menu that would extend the system gesture and provide quick access to those actions. I built the prototypes that narrowed down the design iterations, explored and refined this interaction, and provided specs, code, and feedback to the engineers who built it into the shell.

Recenter with Hands

Users could not easily recenter their devices using hand tracking. The initial implementation of this feature on internal builds recentered improperly, because users had their heads turned slightly to the right to watch the gesture. I began adding a brief cooldown timer to this interaction in my own (otherwise unrelated) prototypes, which gave users a moment to settle before the recenter took effect. The approach influenced Design and was eventually incorporated into the system shell.
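
The cooldown idea can be sketched in a few lines. This is a minimal illustration in Python (the original prototypes were built in Unity), not the shell's implementation: the class name `DelayedRecenter`, the `cooldown_s` parameter, and the 0.5 s value are all hypothetical. The key point is that the head pose is sampled only after the delay, not at the instant the gesture completes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DelayedRecenter:
    """Hypothetical sketch: wait out a short cooldown before sampling the head pose."""

    cooldown_s: float = 0.5  # assumed value; the shipped delay is not stated here
    triggered_at: Optional[float] = None  # time the recenter gesture completed

    def on_gesture_complete(self, now: float) -> None:
        # Start (or restart) the cooldown instead of recentering immediately.
        self.triggered_at = now

    def update(self, now: float, head_yaw_deg: float) -> Optional[float]:
        """Call once per frame; returns the yaw to recenter to, or None while waiting."""
        if self.triggered_at is None:
            return None  # no recenter pending
        if now - self.triggered_at < self.cooldown_s:
            return None  # user is still settling after watching the gesture
        self.triggered_at = None  # consume the pending recenter
        return head_yaw_deg  # sampled only after the cooldown has elapsed


# Usage: the head is still turned toward the hand right after the gesture.
recenter = DelayedRecenter(cooldown_s=0.5)
recenter.on_gesture_complete(now=10.0)
early = recenter.update(now=10.1, head_yaw_deg=15.0)   # still in cooldown
settled = recenter.update(now=10.6, head_yaw_deg=0.0)  # uses the settled forward yaw
```

Restarting the timer on every completed gesture keeps the sketch simple; a real shell would also need to handle cancellation and tracking loss.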

Guardian Setup for Hands

It was essential that users be able to set up their Guardian bounds with hands as well as with controllers. The standard ray pointer model for hands was optimized for vertical panel surfaces and did not allow users to accurately aim at and draw on a floor plane to recreate their play space. Alternate pointer models were proposed during the Guardian phase, and I built a prototype that let us test several of them side by side in user research, ultimately validating the current implementation.
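
Part of the floor-drawing difficulty is geometric. The sketch below is a hypothetical helper, not code from the prototype: `ray_floor_hit` and `hit_distance` are illustrative names, and it assumes Unity-style y-up axes with the hand about 1 m above the floor. It intersects a pointer ray with a horizontal floor plane and suggests why a ray tuned for vertical panels is hard to use on the floor: at shallow angles, a small wrist movement moves the hit point a long way.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def ray_floor_hit(origin: Vec3, direction: Vec3, floor_y: float = 0.0) -> Optional[Vec3]:
    """Intersect a pointer ray with the horizontal plane y == floor_y (y-up axes)."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dy >= 0.0:
        return None  # ray is level or points upward, so it never reaches the floor
    t = (floor_y - oy) / dy  # ray parameter where the height equals floor_y
    return (ox + t * dx, floor_y, oz + t * dz)


def hit_distance(hand_height_m: float, tilt_down_deg: float) -> float:
    """Horizontal distance to the floor hit for a ray tilted down from level."""
    a = math.radians(tilt_down_deg)
    hit = ray_floor_hit((0.0, hand_height_m, 0.0), (0.0, -math.sin(a), math.cos(a)))
    if hit is None:
        raise ValueError("ray does not reach the floor")
    return hit[2]


# A shallow ray is very sensitive: one degree of wrist motion moves the hit about 0.5 m.
for deg in (10, 11, 45, 46):
    print(f"{deg:>2} deg down -> {hit_distance(1.0, deg):.2f} m")
```

The same one-degree change near 45 degrees moves the hit only a few centimeters, which is one reason alternate pointer models were worth comparing side by side.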
