Updated Keyboard Form

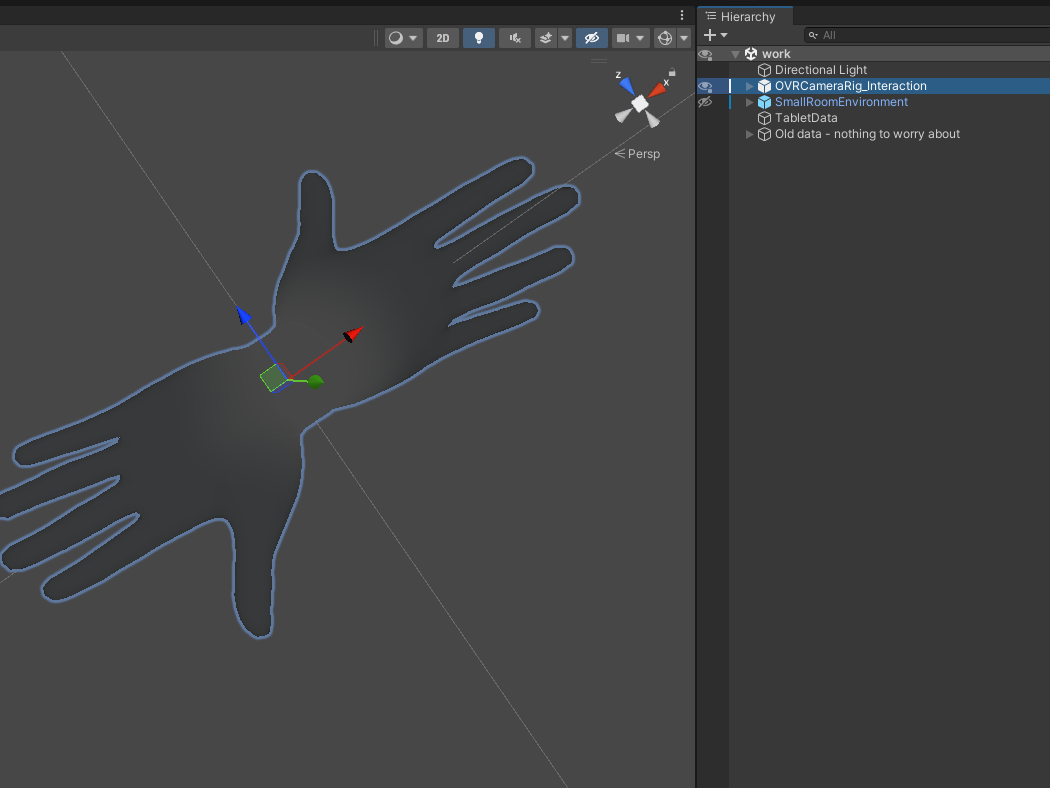

Dozens of design variants were considered for the new form. The original Unity prototype asset I built let our design team quickly test and iterate on variations in key size and spacing, as well as the keyboard's overall size and distance from the user. We tested the new form with the goal of a balanced user experience across all input methods. Our research included direct touch input with both hands and controllers, even though we knew early on that direct touch would not be available in the system for some time.

Typing Speed Test

Meta did not have a robust internal solution for determining the total corrected error rate of a typing test on the virtual keyboard. I found a research paper on the subject that provided the formulas needed to calculate total error rate. I rewrote those formulas in C# to accurately record words per minute and total corrected error rate on both our prototype keyboard and the default overlay keyboard in the Oculus API. This became the backbone of our validation strategy for all future keyboard user studies.
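
The metrics can be illustrated with a short Python sketch (the original implementation was in C#). It assumes the widely used text-entry definitions popularized by Soukoreff and MacKenzie, which may differ in detail from the paper and code described above: words per minute treats five characters as one word and times from the first keystroke, and error rates split characters into correct (C), incorrect-not-fixed (INF, taken as the minimum string distance between the presented and transcribed text) and incorrect-but-fixed (IF). The function names are hypothetical, and the `incorrect_fixed` count is assumed to come from the keystroke log.

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """WPM using the five-characters-per-word convention.

    Timing starts at the first keystroke, so the first character is not
    counted (hence len - 1).
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (len(transcribed) - 1) * 60.0 / (seconds * 5.0)


def min_string_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def error_rates(presented: str, transcribed: str, incorrect_fixed: int) -> dict:
    """Total, corrected, and uncorrected error rates as fractions in [0, 1].

    incorrect_fixed (IF) is the number of erroneous characters that were
    typed and later deleted; it has to come from the keystroke log.
    """
    inf = min_string_distance(presented, transcribed)  # incorrect, not fixed
    c = max(len(presented), len(transcribed)) - inf    # correct characters
    total = c + inf + incorrect_fixed
    if total == 0:
        return {"total": 0.0, "corrected": 0.0, "uncorrected": 0.0}
    return {
        "total": (inf + incorrect_fixed) / total,
        "corrected": incorrect_fixed / total,
        "uncorrected": inf / total,
    }
```

For example, `error_rates("the quick brown fox", "the quikc brown fox", incorrect_fixed=2)` finds a minimum string distance of 2, so C = 17 and the total error rate is 4/21, about 19%.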

Training the Language Model

With the final keyboard design heading to engineering for implementation, we needed a build that could gather usage data to train the language model on the specific dimensions and layout of the virtual keyboard.

I designed a build that wrote the local 2D coordinates of cursor positions and key presses on every frame, along with any typing errors, to a log file. At the end of each session, the app automatically pushed the log file to an internal Meta server.
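
A minimal Python sketch of this kind of per-frame session log is shown here, assuming a CSV layout with one row per frame. The field names, the `FrameSample` and `SessionLogger` types, and the `upload` callback are all hypothetical: the real build ran in Unity, and the push to the internal server is represented only by an injected function.

```python
import csv
import io
from dataclasses import dataclass
from typing import Callable, Optional, TextIO


@dataclass
class FrameSample:
    frame: int
    time_s: float
    cursor_x: float                     # local 2D position on the keyboard plane
    cursor_y: float
    key_pressed: Optional[str] = None   # key label if a press happened this frame
    error: Optional[str] = None         # description of a typing error, if any


class SessionLogger:
    """Writes one CSV row per frame and hands the log to `upload` at session end."""

    FIELDS = ["frame", "time_s", "cursor_x", "cursor_y", "key_pressed", "error"]

    def __init__(self, stream: TextIO, upload: Callable[[TextIO], None]):
        self._stream = stream
        self._upload = upload           # hypothetical stand-in for the server push
        self._writer = csv.writer(stream)
        self._writer.writerow(self.FIELDS)

    def log(self, s: FrameSample) -> None:
        self._writer.writerow([
            s.frame, f"{s.time_s:.4f}", f"{s.cursor_x:.4f}", f"{s.cursor_y:.4f}",
            s.key_pressed or "", s.error or "",
        ])

    def end_session(self) -> None:
        self._stream.flush()
        self._upload(self._stream)
```

Injecting the upload step keeps the logger testable offline, for example by passing an `io.StringIO` and a callback that just records its contents.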

The language model was used to implement Auto-Correct, Auto-Complete, and Word Suggestion, further increasing the speed and accuracy of text entry.
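
One common way to use logged touch data of this kind is a spatial model: fit a 2D Gaussian to the touch points recorded for each key, then rank candidate words by a word-frequency prior plus the likelihood of each touch under the key that word requires. The Python sketch below assumes that approach, an isotropic Gaussian per key, and a toy lexicon of word frequencies; it is an illustration, not the model that shipped on Quest. Completion is shown as a simple frequency-ranked prefix lookup, and all names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float]
KeyModel = Dict[str, Tuple[float, float, float]]  # key -> (mean_x, mean_y, variance)


def fit_key_model(samples: Iterable[Tuple[str, Point]]) -> KeyModel:
    """Fit an isotropic 2D Gaussian per key from (intended key, touch point) samples."""
    points: Dict[str, List[Point]] = defaultdict(list)
    for key, p in samples:
        points[key].append(p)
    model: KeyModel = {}
    for key, pts in points.items():
        n = len(pts)
        mx = sum(x for x, _ in pts) / n
        my = sum(y for _, y in pts) / n
        # Maximum-likelihood variance shared by both axes (isotropic).
        var = sum((x - mx) ** 2 + (y - my) ** 2 for x, y in pts) / (2 * n)
        model[key] = (mx, my, max(var, 1e-6))  # floor avoids zero variance
    return model


def touch_log_likelihood(model: KeyModel, key: str, p: Point) -> float:
    """Log density of touch point p under the Gaussian fitted for key."""
    mx, my, var = model[key]
    d2 = (p[0] - mx) ** 2 + (p[1] - my) ** 2
    return -d2 / (2 * var) - math.log(2 * math.pi * var)


def rank_words(model: KeyModel, lexicon: Dict[str, float],
               touches: List[Point], top_n: int = 3) -> List[str]:
    """Auto-correct: rank same-length words by log prior + touch log-likelihoods."""
    scored = []
    for word, freq in lexicon.items():
        if len(word) != len(touches) or any(ch not in model for ch in word):
            continue
        score = math.log(freq) + sum(
            touch_log_likelihood(model, ch, p) for ch, p in zip(word, touches))
        scored.append((score, word))
    scored.sort(reverse=True)
    return [word for _, word in scored[:top_n]]


def suggest_completions(prefix: str, lexicon: Dict[str, float],
                        top_n: int = 3) -> List[str]:
    """Auto-complete: the most frequent lexicon words that start with prefix."""
    matches = [w for w in lexicon if w.startswith(prefix)]
    return sorted(matches, key=lambda w: lexicon[w], reverse=True)[:top_n]
```

The prior pulls ambiguous taps toward frequent words, while a tap that lands squarely on another key can still outweigh the prior; the per-key means and variances are where layout-specific training data would matter.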

Challenges and Community Support

Many prototypes were required over a long period to support not only the launch but also continued improvements to the Quest system keyboard. The prototyping asset needed frequent maintenance because APIs and internal toolsets were continuously being updated.

I built the prototyping asset to be easy to use: all you had to do was drop it into the scene. I also maintained it on Reality Labs' internal Unity package server, where it was widely used by other prototypers in the org.
